Inspiration

Our initial vision was to develop a game within 10 weeks that could leverage some of the input modalities the Apple Vision Pro provides (particularly gaze and gesture) while remaining feasible in our timeframe. We believed that an FPS zombie apocalypse game would be best suited for this.

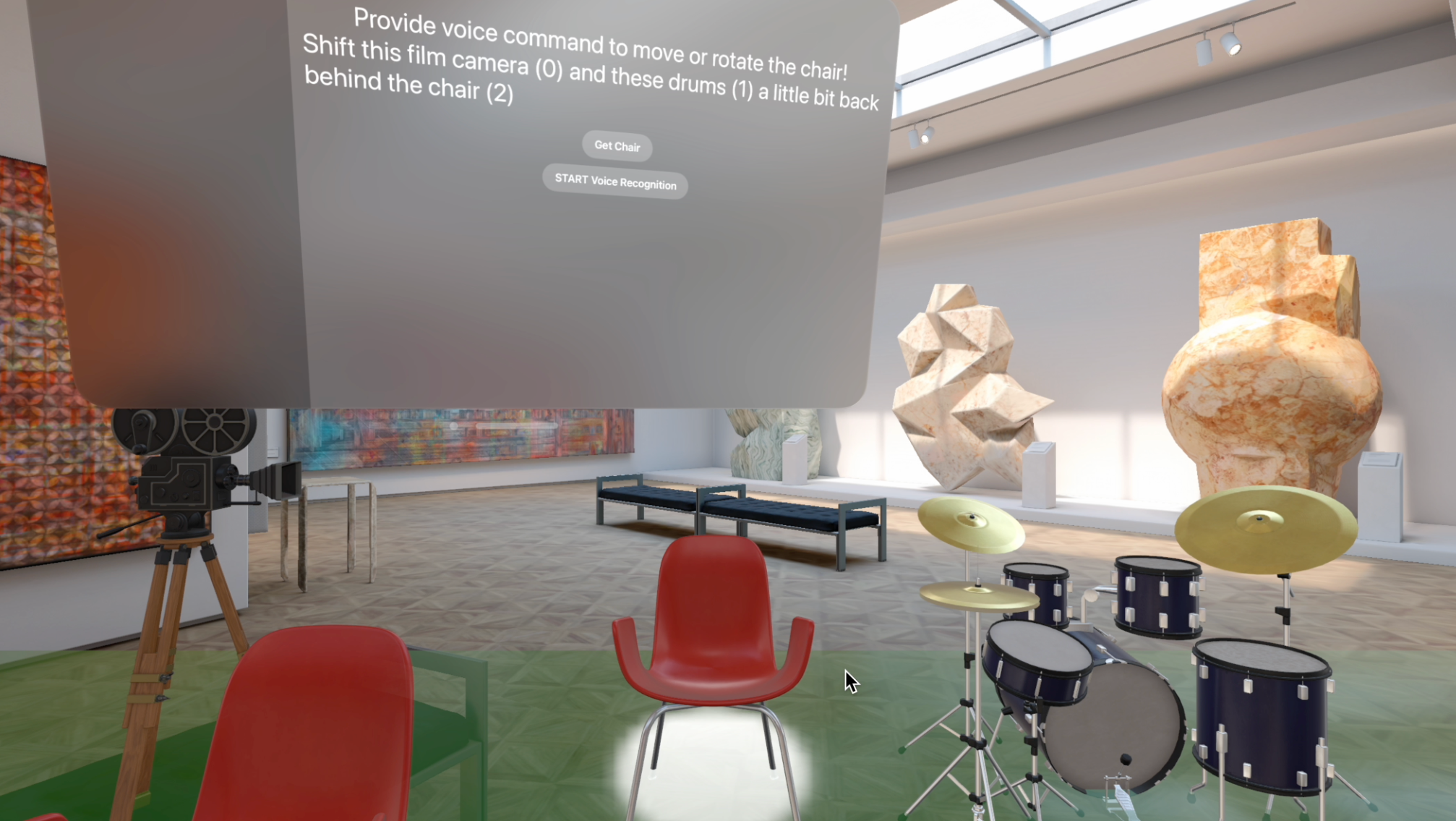

In an AR environment, scenes can be built from the user's real surroundings instead of static, predefined environments.

This also allows for dynamic physics and real-world interaction between virtual and real objects and entities.

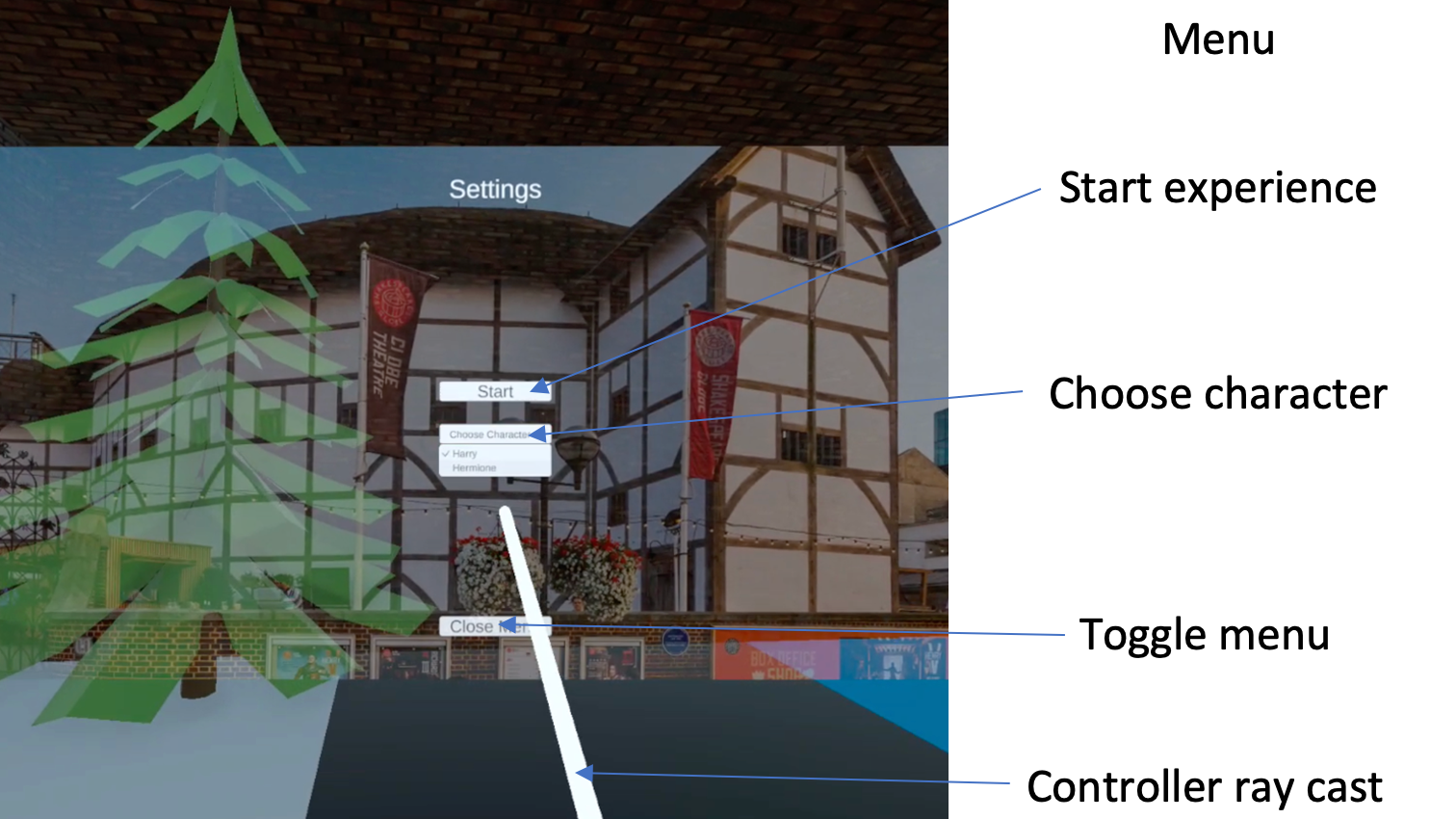

We start with a SwiftUI view that represents a menu. It has navigation links that open an immersive space when the user selects either of the top two links. The "Start Experience" button changes a state variable that begins the animation of the zombie character (see the video below for clarification).
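The menu flow described above can be sketched roughly as follows. This is a minimal sketch, not our exact view: it uses plain buttons rather than navigation links, and the names `MenuView`, `isZombieAnimating`, and the space id `"ZombieSpace"` are assumptions made for illustration. The id has to match an `ImmersiveSpace` scene declared in the app.

```swift
import SwiftUI

// Hypothetical menu view; names and the space id are illustrative assumptions.
struct MenuView: View {
    // State owned by a parent view and shared with the immersive content.
    @Binding var isZombieAnimating: Bool

    // SwiftUI environment action that opens an ImmersiveSpace scene by id.
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        VStack(spacing: 24) {
            Button("Open Immersive Space") {
                Task {
                    // Opening a space is asynchronous; the result is ignored here.
                    _ = await openImmersiveSpace(id: "ZombieSpace")
                }
            }

            Button("Start Experience") {
                // Flipping this flag is what the immersive view would observe
                // to begin the zombie animation.
                isZombieAnimating = true
            }
        }
        .padding()
    }
}
```

A parent view would own the flag with `@State` and pass it in as `MenuView(isZombieAnimating: $flag)`.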

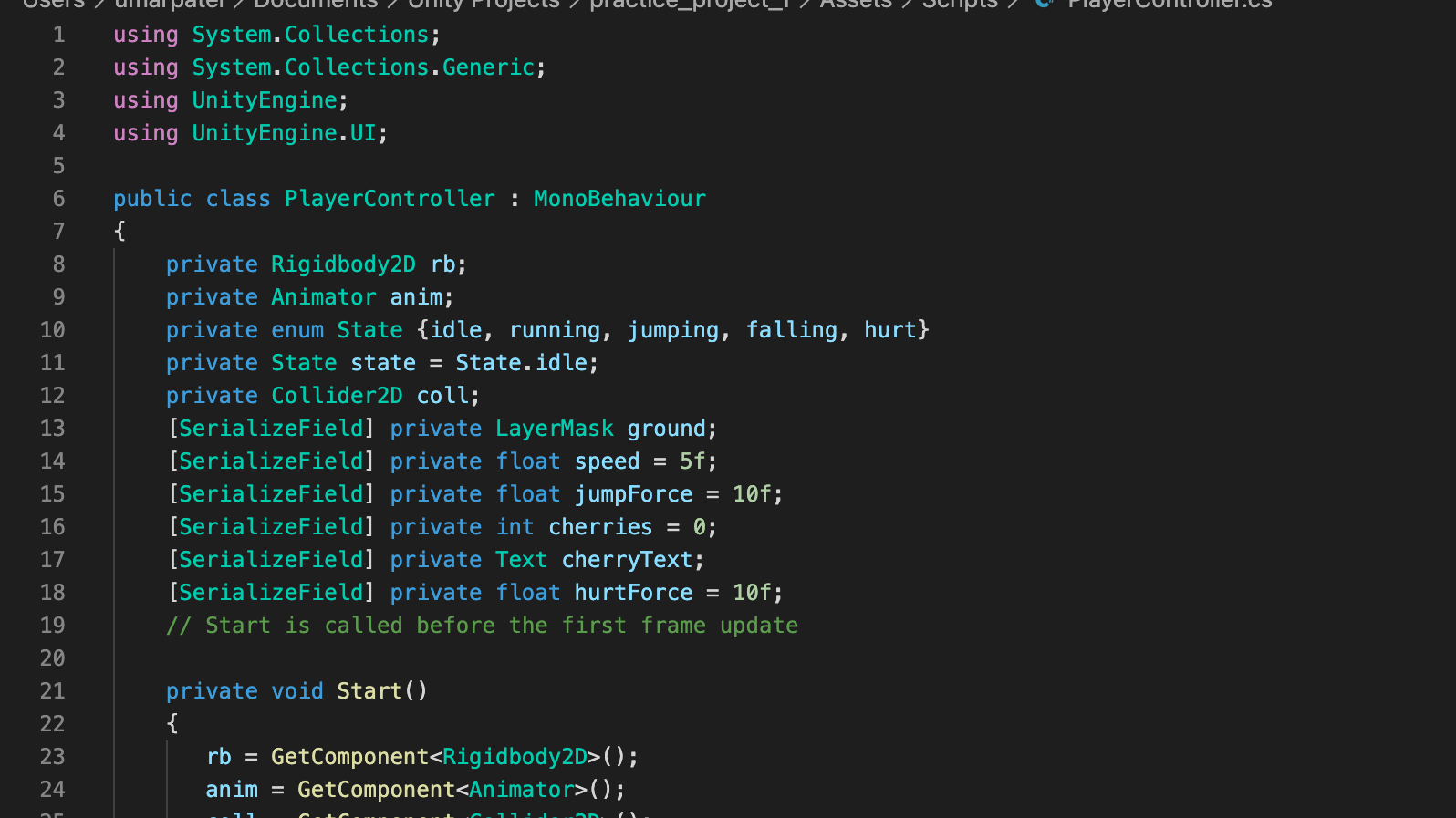

Technical components

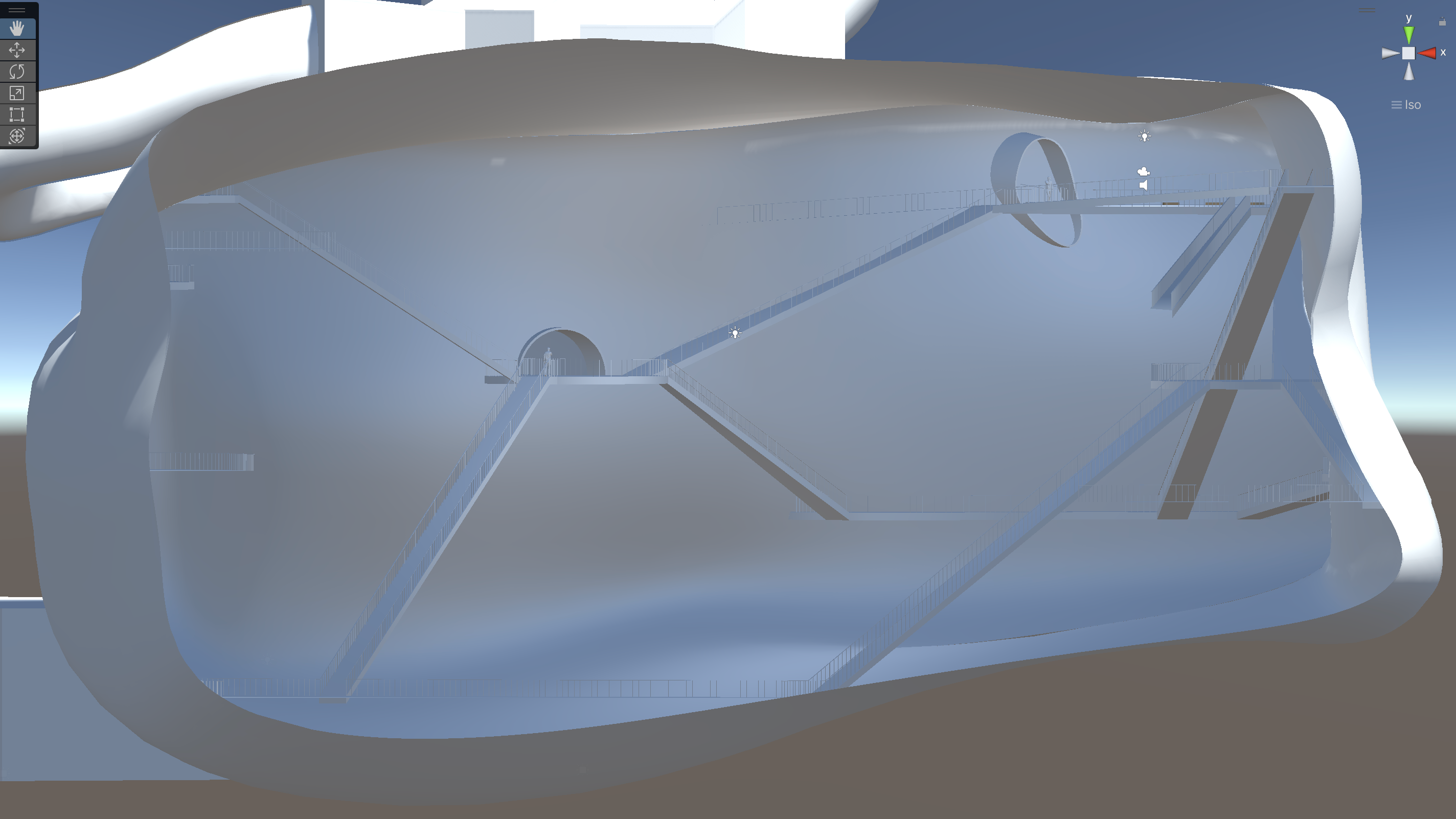

Environment setup: place the zombie in the virtual space of the simulator, with a gun placed in front of the camera.

Motion interaction between zombie and gun: ensure that the hit animation plays when the projectile from the gun hits the zombie.

Spatial tap gesture to shoot the zombie.

Special effects at the dynamic location.

Use of RealityKit’s move function and a timer to create a custom animation for the zombie.
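To illustrate the spatial tap gesture component listed above, here is a minimal sketch of a `RealityView` that reacts when an entity is tapped. The box standing in for the zombie, the view name `ShootingView`, the entity name `"Zombie"`, and the shrink-on-hit reaction are all placeholders we made up for this example; they are not the project's actual assets or hit animation.

```swift
import SwiftUI
import RealityKit

// Hypothetical view: a green box stands in for the zombie model.
struct ShootingView: View {
    var body: some View {
        RealityView { content in
            let zombie = ModelEntity(
                mesh: .generateBox(size: 0.3),
                materials: [SimpleMaterial(color: .green, isMetallic: false)]
            )
            zombie.name = "Zombie"
            zombie.position = [0, 1, -1.5]

            // An entity needs both a collision shape and an input target
            // component before gestures can be targeted at it.
            zombie.generateCollisionShapes(recursive: false)
            zombie.components.set(InputTargetComponent())

            content.add(zombie)
        }
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    // Only react to taps that land on the zombie stand-in.
                    guard value.entity.name == "Zombie" else { return }
                    // Placeholder reaction: shrink slightly on every hit.
                    value.entity.scale *= 0.9
                }
        )
    }
}
```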

Here, we set up the AR session that provides plane detection for the AR scene. This allows surfaces in the user's environment to be detected.

In the main body, we set up the zombie character entity and added the corresponding collision components, which enable interactions between the real-world environment and virtual objects.
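A plane-detection setup along these lines might look like the sketch below. It assumes visionOS and its `ARKitSession` and `PlaneDetectionProvider` types; the function name `observePlanes` and the logging are ours, added only for illustration. The early return covers environments where the provider reports that it is unsupported.

```swift
import ARKit

/// Runs plane detection and logs every anchor update until the surrounding
/// task is cancelled. `observePlanes` is a hypothetical name.
func observePlanes() async {
    // Bail out where plane detection is unavailable.
    guard PlaneDetectionProvider.isSupported else {
        print("Plane detection is not supported in this environment.")
        return
    }

    let session = ARKitSession()
    let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical])

    do {
        try await session.run([planes])

        // Each update reports a plane being added, updated, or removed.
        for await update in planes.anchorUpdates {
            switch update.event {
            case .added, .updated:
                print("Plane \(update.anchor.id): \(update.anchor.classification)")
            case .removed:
                print("Plane \(update.anchor.id) removed")
            }
        }
    } catch {
        print("Could not start the ARKit session: \(error)")
    }
}
```

The function would typically be started from a `.task` modifier on the immersive view so that it stops when the view goes away.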
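As a rough illustration of attaching collision components, the helper below gives an entity a box-shaped collision volume and marks it as an input target. The helper name `makeInteractive` and the box dimensions in the usage note are assumptions; a real character model would more likely derive its shape from the mesh.

```swift
import RealityKit

/// Gives `entity` a box collision shape of the given size (in meters) and
/// lets it receive gestures. `makeInteractive` is a hypothetical helper.
@MainActor
func makeInteractive(_ entity: Entity, size: SIMD3<Float>) {
    let shape = ShapeResource.generateBox(size: size)

    // The collision component lets hit tests and collision events find the entity.
    entity.components.set(CollisionComponent(shapes: [shape]))

    // The input target component opts the entity in to gesture input.
    entity.components.set(InputTargetComponent())
}
```

For example, `makeInteractive(zombie, size: [0.5, 1.8, 0.5])` would wrap a roughly person-sized box around an entity named `zombie`.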

Here is a function that animates the zombie character to move in a square path.
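One way to build such a square path from RealityKit's move function and a timer is sketched below. It is not our exact code: `squareWaypoints` and `SquareWalker` are hypothetical names, and the square lies in the horizontal XZ plane with a side length and leg duration chosen only for illustration. The waypoint math is plain Swift; the RealityKit part is compiled only where the framework is available.

```swift
import Foundation
#if canImport(RealityKit)
import RealityKit
#endif

/// Corners of a square in the horizontal (XZ) plane, visited in order and
/// ending back at `origin`. `side` is in meters.
func squareWaypoints(origin: SIMD3<Float>, side: Float) -> [SIMD3<Float>] {
    [
        origin + SIMD3<Float>(side, 0, 0),
        origin + SIMD3<Float>(side, 0, side),
        origin + SIMD3<Float>(0, 0, side),
        origin,
    ]
}

#if canImport(RealityKit)
/// Moves an entity around the square, starting a new leg each time the timer fires.
@MainActor
final class SquareWalker {
    private let entity: Entity
    private let waypoints: [SIMD3<Float>]
    private let legDuration: TimeInterval
    private var leg = 0
    private var timer: Timer?

    init(entity: Entity, side: Float = 1.0, legDuration: TimeInterval = 2.0) {
        self.entity = entity
        self.waypoints = squareWaypoints(origin: entity.position, side: side)
        self.legDuration = legDuration
    }

    func start() {
        step()
        // The timer is scheduled on the main run loop, so its callback
        // already runs on the main thread.
        timer = Timer.scheduledTimer(withTimeInterval: legDuration, repeats: true) { [weak self] _ in
            MainActor.assumeIsolated { self?.step() }
        }
    }

    func stop() {
        timer?.invalidate()
        timer = nil
    }

    private func step() {
        // Keep rotation and scale, change only the translation.
        var target = entity.transform
        target.translation = waypoints[leg % waypoints.count]
        entity.move(to: target, relativeTo: entity.parent,
                    duration: legDuration, timingFunction: .linear)
        leg += 1
    }
}
#endif
```

Driving each leg from a timer avoids needing the `AnimationPlaybackController` that `move` returns, at the cost of relying on the timer interval matching the animation duration.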

We initially attempted to use the animation playback controller returned by the move function to control the animation, but we were unable to get it working correctly.

The "find entity by name" function allows us to find the entity in the scene whose state we want to change.
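A name-based lookup of this kind can be sketched with RealityKit's `findEntity(named:)`, which searches an entity's hierarchy for a matching name. Whether our function wrapped this exact call is not shown here, so treat the helper `setEnabled(_:forEntityNamed:in:)` as hypothetical, and toggling `isEnabled` as just one example of "changing state".

```swift
import RealityKit

/// Looks up an entity by name under `root` and toggles whether it is enabled.
/// Returns false when no entity with that name exists.
@MainActor
func setEnabled(_ isEnabled: Bool, forEntityNamed name: String, in root: Entity) -> Bool {
    guard let target = root.findEntity(named: name) else {
        return false
    }
    target.isEnabled = isEnabled
    return true
}
```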

Demo video of experience

Challenges + Future Steps

There were some SwiftUI and RealityKit features that we were not very familiar with, particularly the animation playback controller.

Working with the coordinate spaces was confusing.

ARKit is not available in the simulator.

We had some trouble configuring the animation playback controller, which we wanted to use for the zombie. In a future iteration of this project, we plan to get that working properly.

It was hard to implement hand-tracking gestures within the time frame that we had. In future iterations of this app, we plan to implement hand-tracking gestures.