The experience focuses on French language learning in the context of a tourist center right beside the Eiffel Tower in Paris. Future versions could incorporate other languages, such as Spanish, German, or Arabic, and other contexts, such as travel sites like airport or railway terminals, street markets, or museums.

There are 4 stalls at the tourist center that the user can approach to begin a conversation: 1) a food stall, 2) an art stall, 3) a travel/trip-planning agency, and 4) a souvenir shop.

Depending on what the user wants to practice, they can walk up to the corresponding stall and begin a conversation.

Here is a demo of the experience. (For reference, the user, me, was not very familiar with French.)

Design Process

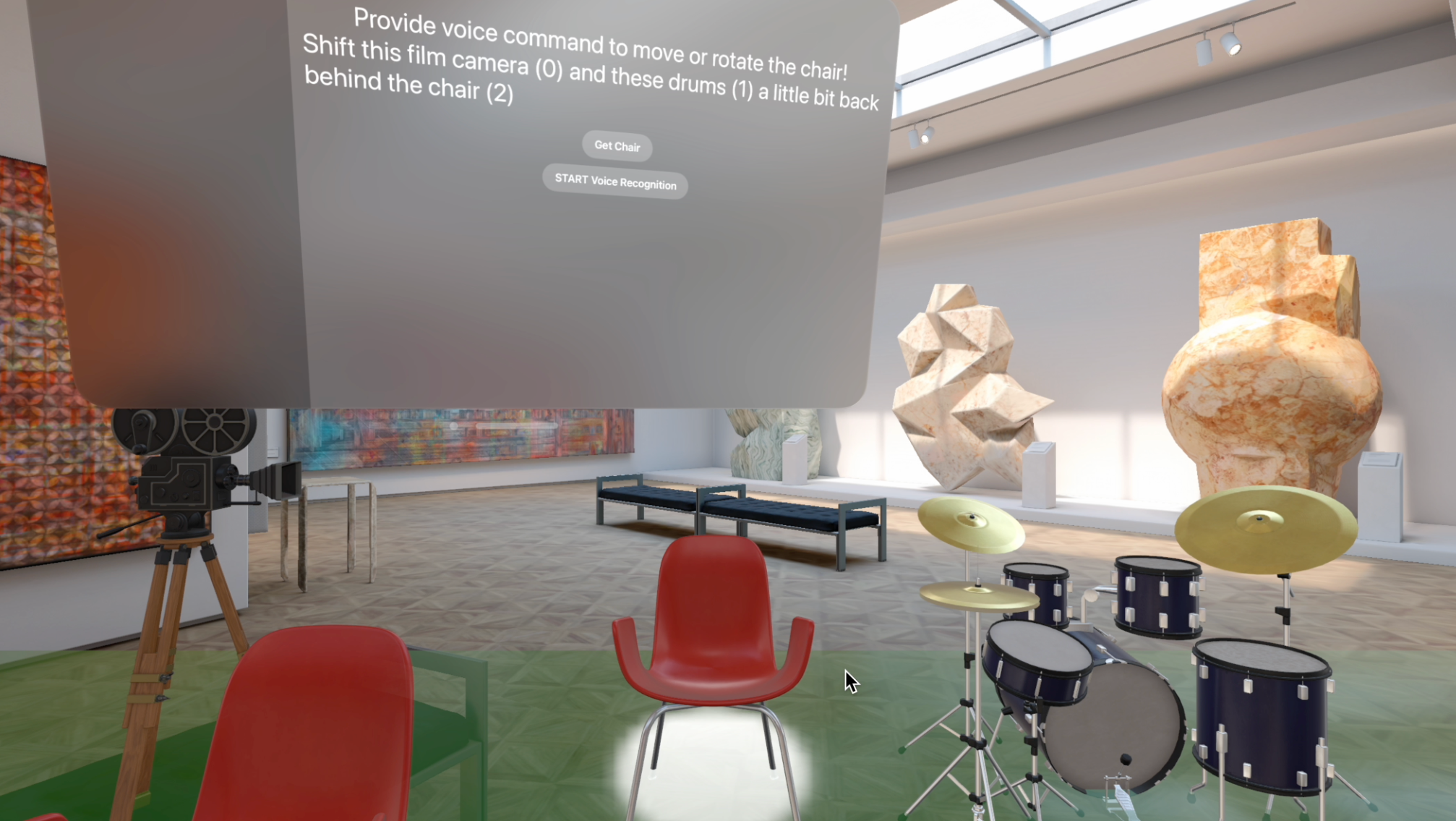

I developed the app in the Unity Editor, using Unity's XR Interaction Toolkit, OpenXR, and the Oculus XR plugins. I also used the Oculus Voice SDK (Wit.ai) to handle speech-to-text interaction.

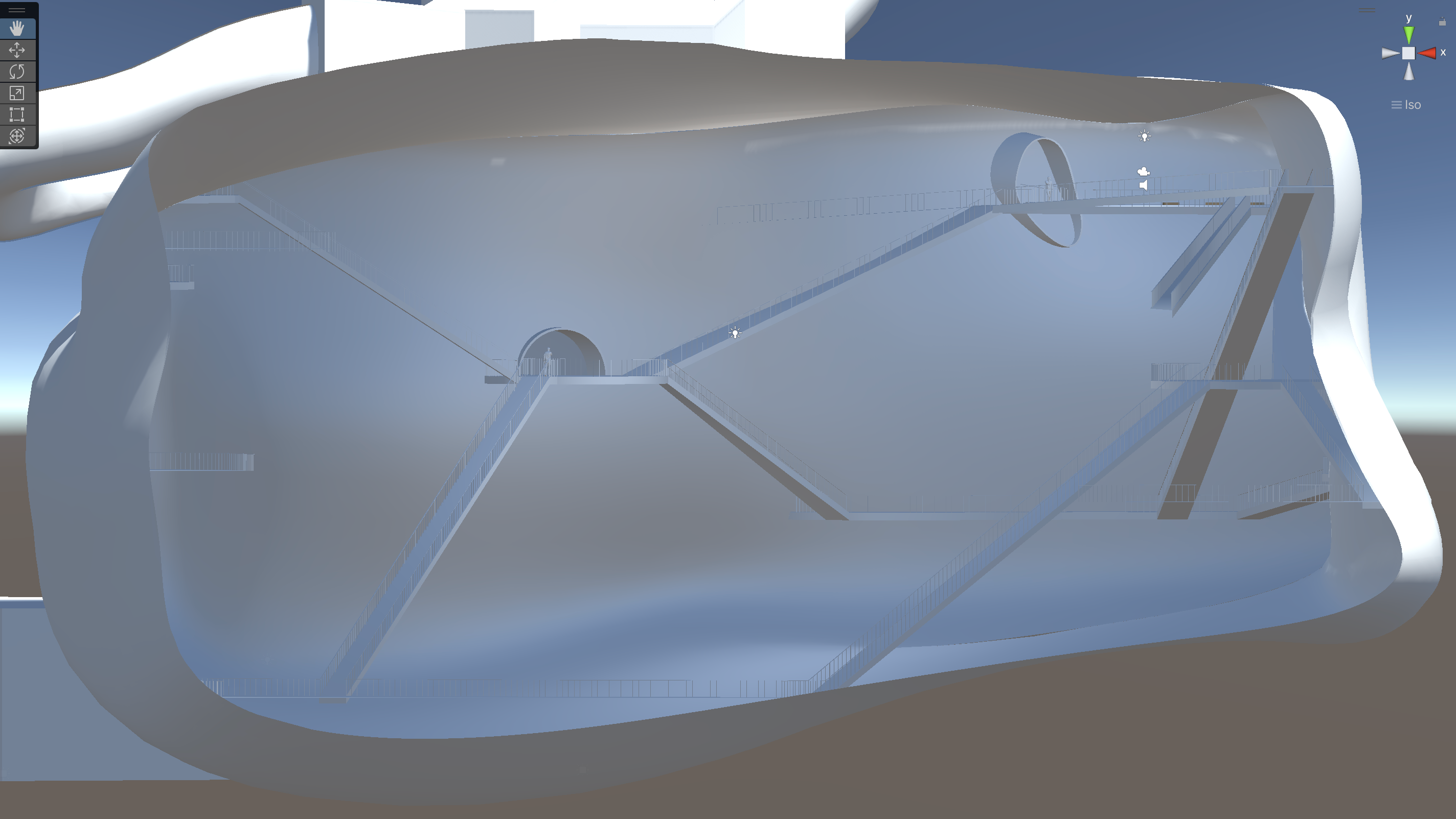

For the 3D space, I used Unity's ProBuilder design tools.

Stall Structure

The structure of a single stall is as follows:

Each of the 4 stalls has a platform that the user can step on, which helps initiate that stall's conversation.

There is a sign in front of the stall that lists some things the user can buy (as this is a tourist site). In the example shown, the sign lists some of the food items available at the food stall.

There is a physical wooden stall where, in future iterations of the app, I will place an avatar or other physical items that the user can interact with.

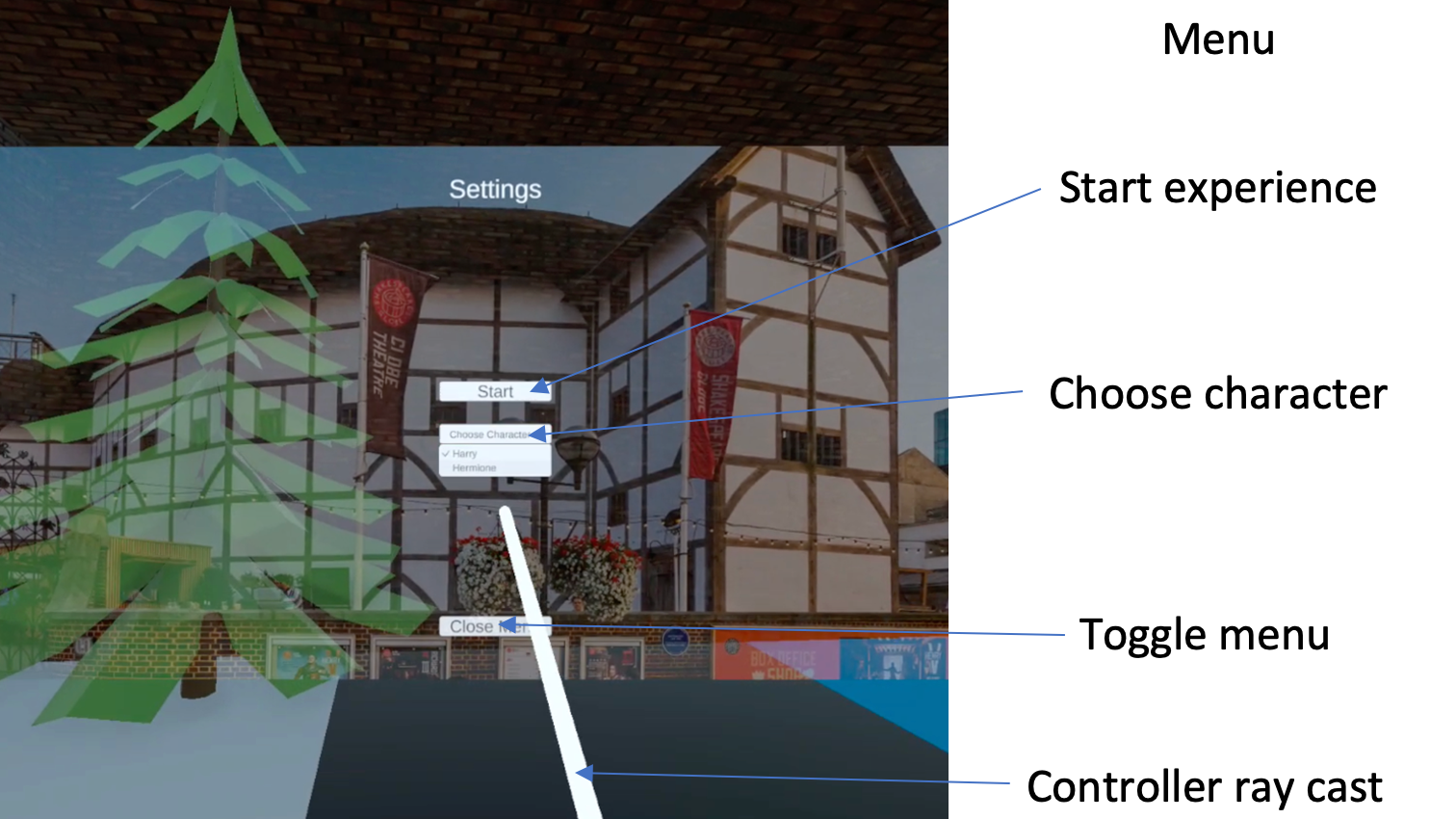

UI

The user can also press one of the 4 buttons displayed in front of them to choose one of the 4 categories. The response handler then uses that category as the context for the conversation.

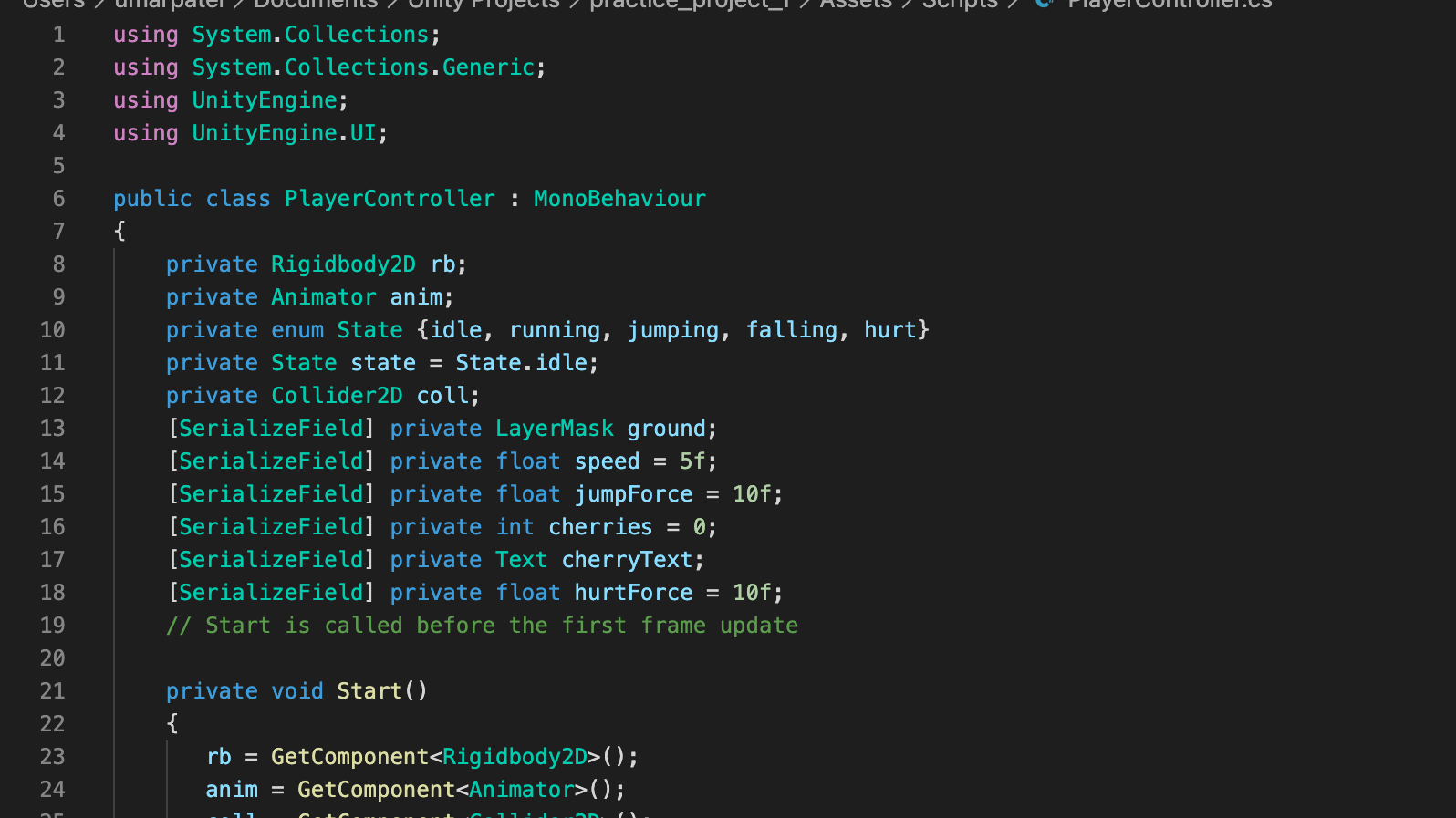

Programming and Conversation Framework

We use OpenAI's API to prompt for and receive context-specific responses to what the user says.

Note: the user_Utterance variable holds the text that is displayed in front of the user. It mainly shows instructions and the context-specific response from the handler.

Depending on the user's selection, a different context is set. In our experience, the four contexts are a food stall, an activities shop (the travel/trip-planning agency), a souvenir shop, and an art stall, as mentioned above.
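As a rough illustration of this selection step, the sketch below maps each of the four stalls to a context string. It is written in Python for brevity rather than in the app's Unity code, and the names `STALL_CONTEXTS` and `set_context`, as well as the wording of each context, are assumptions made for this example.

```python
# Hypothetical context descriptions, one per stall. The wording is
# illustrative only and is not taken from the app.
STALL_CONTEXTS = {
    "food": "You are a vendor at a food stall near the Eiffel Tower.",
    "activities": "You are an agent at a trip-planning agency near the Eiffel Tower.",
    "souvenir": "You are a shopkeeper at a souvenir shop near the Eiffel Tower.",
    "art": "You are an artist at an art stall near the Eiffel Tower.",
}


def set_context(selection: str) -> str:
    """Return the context string for the stall the user selected."""
    try:
        return STALL_CONTEXTS[selection]
    except KeyError:
        # Reject selections that do not correspond to one of the four stalls.
        raise ValueError(f"Unknown stall: {selection!r}") from None
```

Keeping the contexts in a single table means a new stall, or a new language, would only need a new entry rather than new branching logic.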

After the user speaks an utterance, we generate a prompt based on the "conversation so far". To give the system context, we store every response from both the user and the response generator in a list.
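The "conversation so far" idea can be sketched as follows. This is a minimal Python illustration, not the app's Unity code: the `Conversation` class, the speaker labels, and the "Reply in French." instruction are all assumptions, and the actual call to OpenAI's API is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Stores the stall context and every turn spoken so far."""
    context: str
    turns: list = field(default_factory=list)  # list of (speaker, text) pairs

    def add_turn(self, speaker: str, text: str) -> None:
        """Append one utterance from the user or the response generator."""
        self.turns.append((speaker, text))

    def build_prompt(self) -> str:
        """Join the context and the full history into a single prompt."""
        lines = [self.context, "Reply in French.", ""]  # assumed instruction
        lines += [f"{speaker}: {text}" for speaker, text in self.turns]
        lines.append("Vendor:")  # cue for the next generated response
        return "\n".join(lines)


convo = Conversation("You are a vendor at a food stall.")
convo.add_turn("User", "Bonjour, je voudrais un croissant.")
prompt = convo.build_prompt()
```

Because the whole history is resent with each prompt, the generator can refer back to earlier turns, at the cost of a prompt that grows with the length of the conversation.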

Additional Features

The user can use the buttons on the Quest controllers to initiate an utterance. The utterance is captured through the Oculus Voice SDK using Meta's Wit.ai voice callback handler.

Future Steps

- Include avatars for each stall so that the user feels as if they are speaking to someone in real life.

- Incorporate the speech-to-text pipeline.

- Include different contexts for French, such as a street market or an airport/railway terminal.

- Support multiple languages.