Motivations

Current XR Frameworks:

- Mostly unimodal

- Gaze is usually focused on the UI rather than the content, and cannot be on both at once

- Multimodal inputs must be handled manually

- Highly specific commands are required for accurate multimodal interaction

Swift Genie XR (Our Framework):

- Supports multimodal input and commands

- Can change the state of an object while gaze remains on the content

- Allows gaze and other gestures to fill in missing information in voice or text-based commands

- Provides options for incomplete commands

- Leverages GPT to convert voice and gesture inputs into executable functions

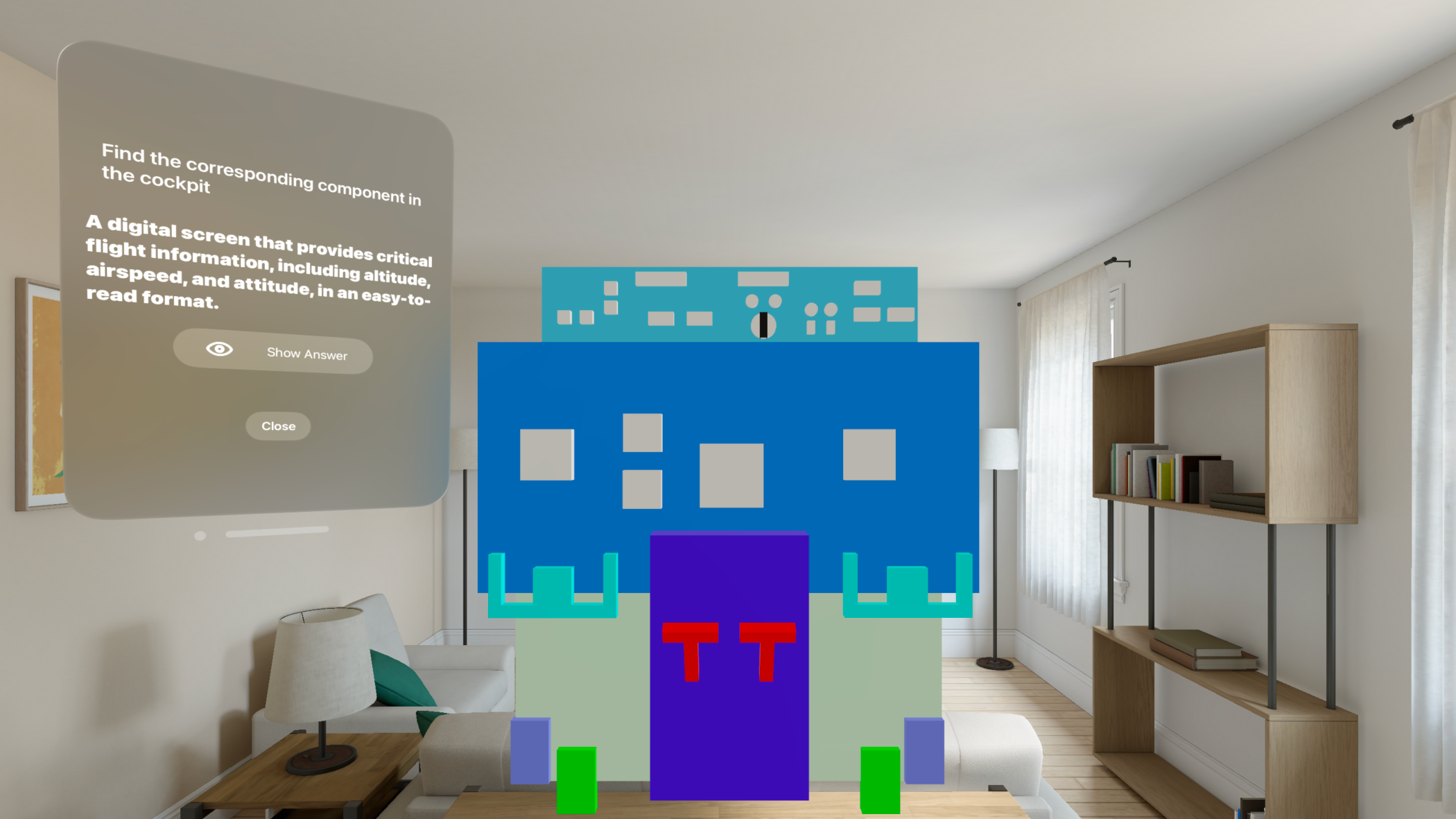

Visual Overview: Furniture Demo Application

The main multimodal application we built this summer was a furniture app that lets users change the state and position of furniture in a room.

We developed and tested this app using Apple's visionOS framework (currently in beta) and the Apple Vision Pro simulator in Xcode, since the headset itself is not yet available.

Annotated Commands Fill Informational Gaps, Assisted by Gaze and Gesture

The debug screen logs the user's voice command and displays the annotated command, which marks every world-space interaction (e.g., click points and gestures) the user performed while giving the command.

The numbers in parentheses represent the individual interactions made in world space: (0) is a tap on the film camera, (1) a tap on the drums, and (2) a tap on the chair.

Swift Genie XR stores information about each entity in world space in its backend, so it can access information about every object referenced in a command.
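As a rough illustration of such a backend store, here is a minimal sketch that keeps one record per entity, keyed by an ID. The type names `EntityInfo` and `EntityStore` and the chosen fields (name, position, scale) are hypothetical and not taken from Swift Genie XR; a real visionOS app would store or reference RealityKit entities instead.

```swift
// Hypothetical record of what the backend might keep for each entity.
struct EntityInfo {
    let id: Int
    let name: String           // e.g. "chair"
    var position: SIMD3<Float> // world-space position in meters
    var scale: Float           // uniform scale factor
}

// Hypothetical in-memory store keyed by entity ID, so every object a
// command references can be looked up while the command is processed.
final class EntityStore {
    private var entities: [Int: EntityInfo] = [:]

    func register(_ info: EntityInfo) {
        entities[info.id] = info
    }

    func info(for id: Int) -> EntityInfo? {
        entities[id]
    }

    // Apply a state change (position, scale, ...) to a stored entity.
    func update(_ id: Int, _ change: (inout EntityInfo) -> Void) {
        guard var info = entities[id] else { return }
        change(&info)
        entities[id] = info
    }
}

let store = EntityStore()
store.register(EntityInfo(id: 7, name: "chair", position: SIMD3(0, 0, -2), scale: 1))
store.update(7) { $0.scale = 1.5 }
```

Keying by ID keeps lookups constant-time when a single command references several objects.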

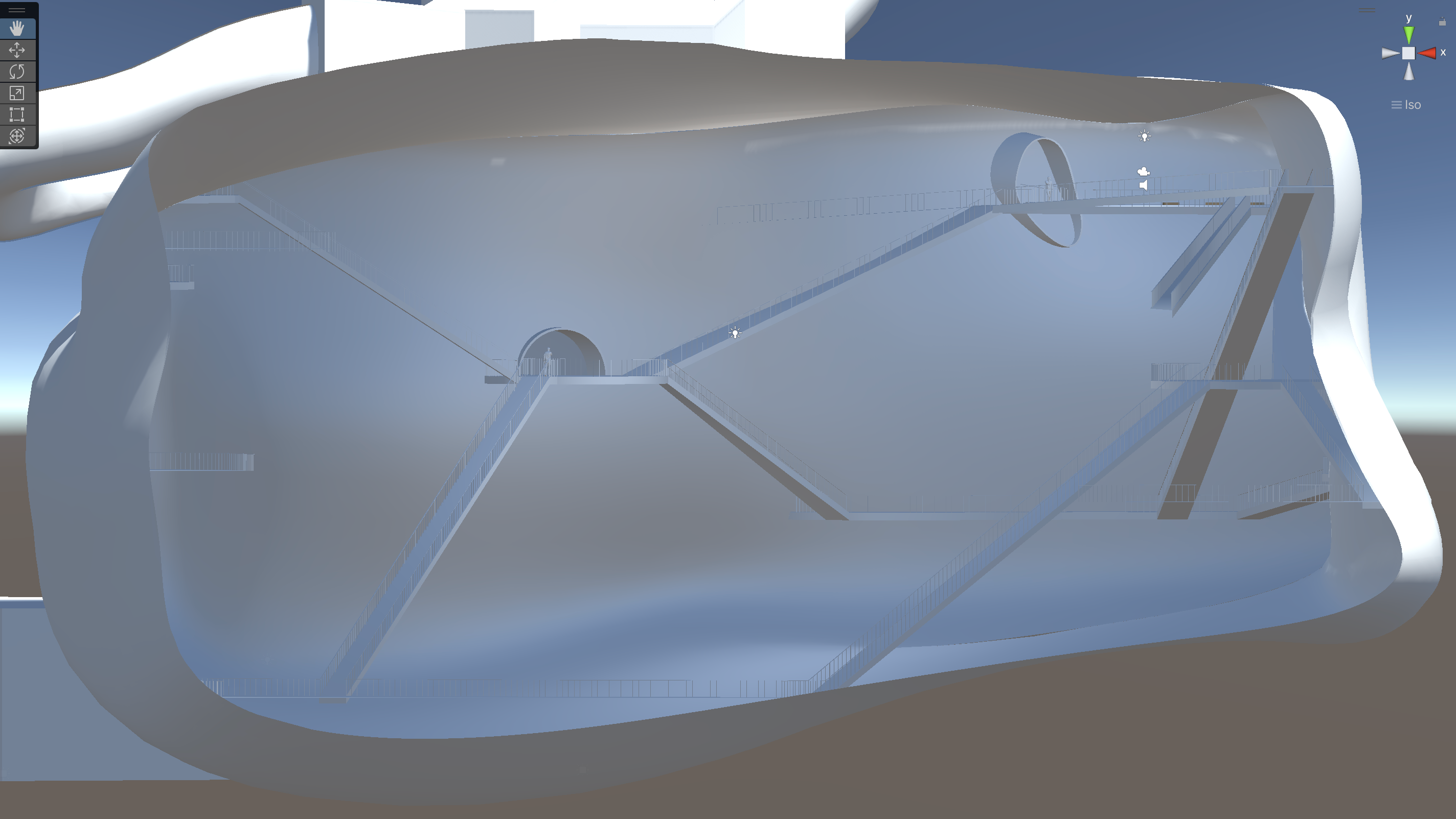

Below is an overview of how a user's world-space interactions are converted into an annotated command.

Developers can define a custom ClickPoint for their application. In our application, every click point in world space is either a "Furniture" entity or an "Environment" entity.

We also store the entity itself (which contains information about its size and features), as well as the position of the click gesture (where in world space the interaction took place).
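A click point of this shape could be modeled roughly as follows. This is a sketch under assumptions: `ClickKind`, `SceneObject`, and the `timestamp` field are illustrative names, and `SceneObject` is a plain stand-in for a RealityKit entity so the example runs outside visionOS.

```swift
// Which kind of thing a tap landed on in the furniture app.
enum ClickKind {
    case furniture   // a movable piece of furniture
    case environment // the room itself: floor, walls, ceiling
}

// Hypothetical stand-in for the tapped entity; in a visionOS app this would
// be a RealityKit Entity carrying its own size and components.
struct SceneObject {
    let name: String
    let size: SIMD3<Float> // bounding-box extents in meters
}

// A custom click point: what was tapped, where the tap landed in world
// space, and when it happened (used later to align the tap with words).
struct ClickPoint {
    let kind: ClickKind
    let object: SceneObject
    let hitPosition: SIMD3<Float>
    let timestamp: Double // seconds since the voice command started
}

let tap = ClickPoint(kind: .furniture,
                     object: SceneObject(name: "chair", size: SIMD3(0.5, 0.9, 0.5)),
                     hitPosition: SIMD3(0.1, 0.45, -1.8),
                     timestamp: 1.32)
print(tap.kind, tap.object.name) // furniture chair
```

Recording a timestamp on each click is what makes the word alignment step possible.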

We then combine the raw command with the clicked entity points and annotate the raw command by labeling the sentence with the entity clicks.

We use timestamps to determine which word(s) in the command correspond to each entity click in world space.

The result is an annotated string like the one shown on the debug screen in the images above.
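The timestamp alignment can be sketched as follows, assuming the speech recognizer reports a start time for each word and each click carries its own timestamp. The nearest-start-time rule and the names `TimedWord` and `annotate` are assumptions for illustration, not the framework's documented matching logic.

```swift
// A recognized word and when it started, in seconds from the utterance start.
struct TimedWord {
    let text: String
    let start: Double
}

// Attach each click to the word whose start time is nearest, then append
// "(i)" after that word, where i is the click's position in time order.
func annotate(words: [TimedWord], clickTimes: [Double]) -> String {
    var tags = Array(repeating: [Int](), count: words.count)
    for (index, t) in clickTimes.sorted().enumerated() {
        guard let nearest = words.indices.min(by: {
            abs(words[$0].start - t) < abs(words[$1].start - t)
        }) else { continue }
        tags[nearest].append(index)
    }
    var parts: [String] = []
    for (i, word) in words.enumerated() {
        parts.append(word.text)
        for tag in tags[i] { parts.append("(\(tag))") }
    }
    return parts.joined(separator: " ")
}

let words = [
    TimedWord(text: "Here", start: 0.0),
    TimedWord(text: "is", start: 0.4),
    TimedWord(text: "where", start: 0.6),
    TimedWord(text: "I", start: 0.9),
    TimedWord(text: "want", start: 1.0),
    TimedWord(text: "this", start: 1.3),
    TimedWord(text: "chair", start: 1.6),
]
// A tap on the floor at 0.1 s and on the chair at 1.7 s.
print(annotate(words: words, clickTimes: [0.1, 1.7]))
// Here (0) is where I want this chair (1)
```

A nearest-word rule is the simplest choice; a real system might prefer the noun phrase being spoken when the tap lands.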

Swift Genie XR Converts Annotated Commands into Executable Actions through a DSL (Domain-Specific Language) Interpreter

Here are a couple of examples:

Notice how the annotations are translated into the executable command (right).

The executable call is written in Swift and uses customizable functions defined by the developer.
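One way to picture the interpreter's last step is a table of developer-registered functions, with annotation indices resolved to the entities clicked during the command. This is a minimal sketch: the `ParsedCall` format, `DSLInterpreter`, and the `setColor` handler are hypothetical, and handlers return a description string instead of mutating real entities.

```swift
// Hypothetical structured call, as the language model might return it after
// reading an annotated command such as "Make this chair (2) red".
struct ParsedCall {
    let function: String
    let entityRefs: [Int]   // annotation indices: "(2)" -> 2
    let arguments: [String]
}

final class DSLInterpreter {
    typealias Handler = (_ entities: [String], _ args: [String]) -> String
    private var handlers: [String: Handler] = [:]
    private let clicked: [String] // clicked entities, indexed by annotation number

    init(clicked: [String]) {
        self.clicked = clicked
    }

    // Developers register their own functions under the names the model may emit.
    func register(_ name: String, _ handler: @escaping Handler) {
        handlers[name] = handler
    }

    // Resolve annotation indices to entities and run the matching handler;
    // returns nil for unknown functions or out-of-range references.
    func execute(_ call: ParsedCall) -> String? {
        guard let handler = handlers[call.function] else { return nil }
        var entities: [String] = []
        for ref in call.entityRefs {
            guard clicked.indices.contains(ref) else { return nil }
            entities.append(clicked[ref])
        }
        return handler(entities, call.arguments)
    }
}

let interpreter = DSLInterpreter(clicked: ["film camera", "drums", "chair"])
interpreter.register("setColor") { entities, args in
    "set \(entities[0]) color to \(args[0])"
}
let result = interpreter.execute(
    ParsedCall(function: "setColor", entityRefs: [2], arguments: ["red"]))
print(result ?? "unknown command") // set chair color to red
```

Restricting execution to registered names keeps model output from invoking arbitrary code.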

Swift Genie XR Backend Transforms Commands into Actions

Other Key Features of the Swift Genie XR Framework

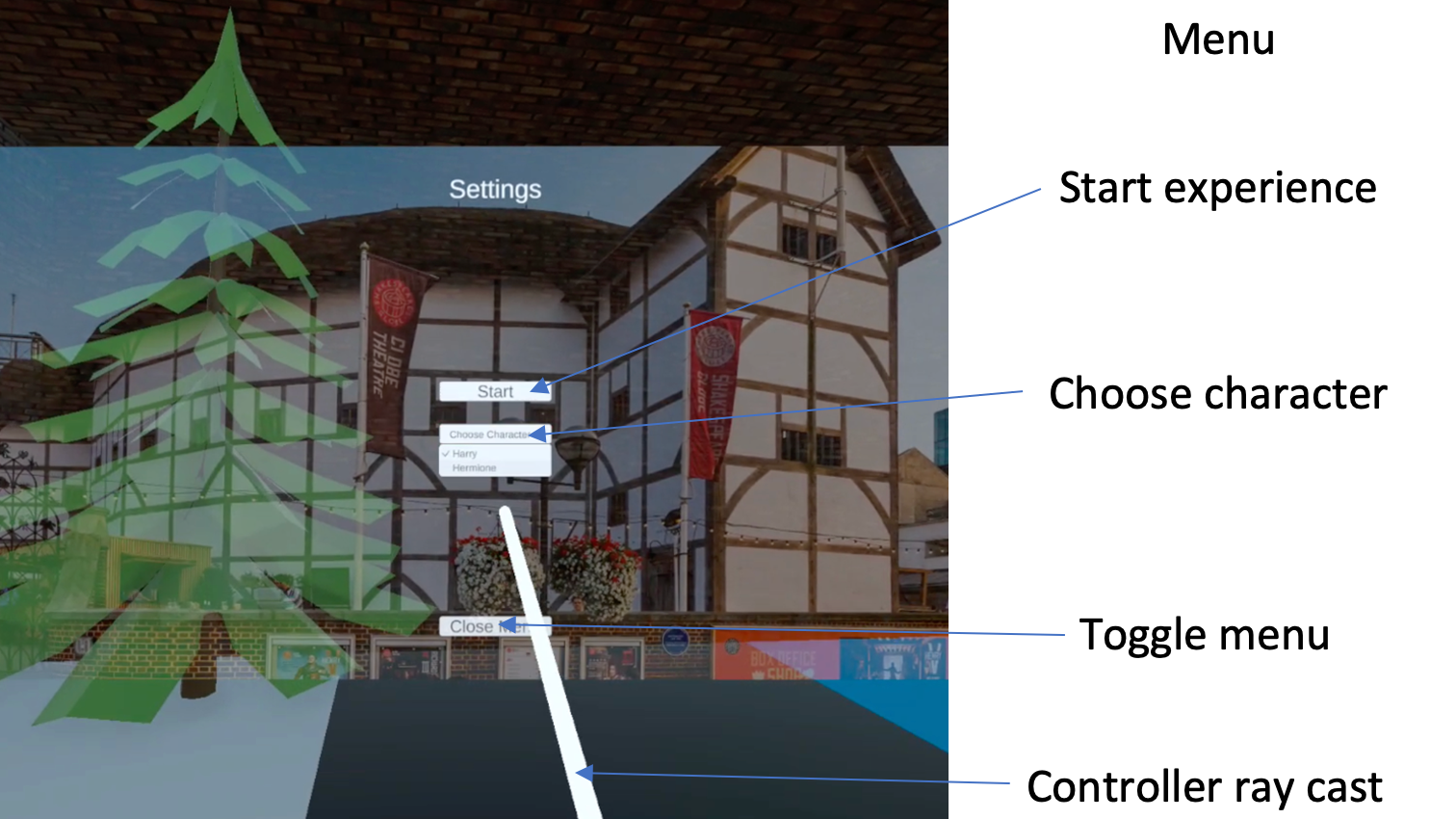

Incomplete Commands Are Handled by Prompting the User

Current mixed reality frameworks require very specific commands to produce an executable call that can be performed accurately. Our framework, by contrast, handles incomplete commands by recognizing missing information and prompting the user to fill in the gaps.

When the user asks to change the "color of this chair", a scrollable UI list appears on top of the entity the user clicked.

In the next case, the user asks to change the size of the chair, so Swift Genie XR activates the size-changing UI for the object of interest.

Here, the user asks to rotate the chair, so the framework provides a rotation UI that lets the user rotate the object manually.
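The decision between executing a command and prompting for missing information can be sketched as a small function: if a target was identified but the value is missing, pick the control that supplies that value. The `Action`, `PromptUI`, and `Resolution` types are hypothetical, and the mapping simply mirrors the color, size, and rotation cases above.

```swift
// Hypothetical set of actions exposed by the furniture app.
enum Action {
    case setColor, setScale, rotate
}

// On-object controls that can supply a missing value.
enum PromptUI: Equatable {
    case colorList, sizeSlider, rotationControl
}

enum Resolution: Equatable {
    case execute(Action, target: Int, value: String)
    case prompt(PromptUI, target: Int)
    case askForTarget // nothing was tapped or gazed at
}

// Decide whether a command is complete; if the value is missing, choose the
// control to show on the target entity instead of failing.
func resolve(_ action: Action, target: Int?, value: String?) -> Resolution {
    guard let target = target else { return .askForTarget }
    if let value = value {
        return .execute(action, target: target, value: value)
    }
    switch action {
    case .setColor: return .prompt(.colorList, target: target)
    case .setScale: return .prompt(.sizeSlider, target: target)
    case .rotate:   return .prompt(.rotationControl, target: target)
    }
}

// "Change the color of this chair (0)": a target but no color.
let outcome = resolve(.setColor, target: 0, value: nil)
```

Here `outcome` is `.prompt(.colorList, target: 0)`, so the app would show the color list on entity 0 rather than rejecting the command.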

Swift Genie XR Supports Multi-Object Inputs and Order-Dependent Annotations

Swift Genie XR supports referencing multiple entities in a single command and handles different orderings of those entities when the annotated command is passed to the DSL.

Annotated command (for the example above): "Here (0) is where I want this chair (1)"

Resulting executable call: `Entity.current(1).setPosition(position: (0, 0, -1), relativeTo: Entity.current(0))`
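To show why word order does not matter, the sketch below pairs each "(n)" tag with the word before it and then assigns roles: a location word marks the anchor and any other tagged word marks the object to move. That keyword rule is a toy stand-in for the GPT step, the function names are hypothetical, and the (0, 0, -1) offset is copied from the example call.

```swift
// Pair each "(n)" tag in an annotated command with the word just before it.
func taggedWords(in annotated: String) -> [(word: String, index: Int)] {
    let tokens = annotated.split(separator: " ").map(String.init)
    var result: [(word: String, index: Int)] = []
    for (i, token) in tokens.enumerated() where i > 0 {
        guard token.hasPrefix("("), token.hasSuffix(")"),
              let n = Int(token.dropFirst().dropLast()) else { continue }
        result.append((word: tokens[i - 1].lowercased(), index: n))
    }
    return result
}

// Toy role assignment standing in for the language model: "here"/"there"
// marks the anchor, any other tagged word marks the entity to move.
func placementCall(for annotated: String) -> String? {
    let locationWords: Set<String> = ["here", "there"]
    let tags = taggedWords(in: annotated)
    guard let anchor = tags.first(where: { locationWords.contains($0.word) }),
          let mover = tags.first(where: { !locationWords.contains($0.word) })
    else { return nil }
    return "Entity.current(\(mover.index)).setPosition(position: (0, 0, -1), relativeTo: Entity.current(\(anchor.index)))"
}

// Same intent, opposite word order: the indices swap roles accordingly.
print(placementCall(for: "Here (0) is where I want this chair (1)") ?? "no placement")
print(placementCall(for: "Move this chair (0) over here (1)") ?? "no placement")
```

Binding by role rather than by position is what lets both phrasings produce a correct call.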

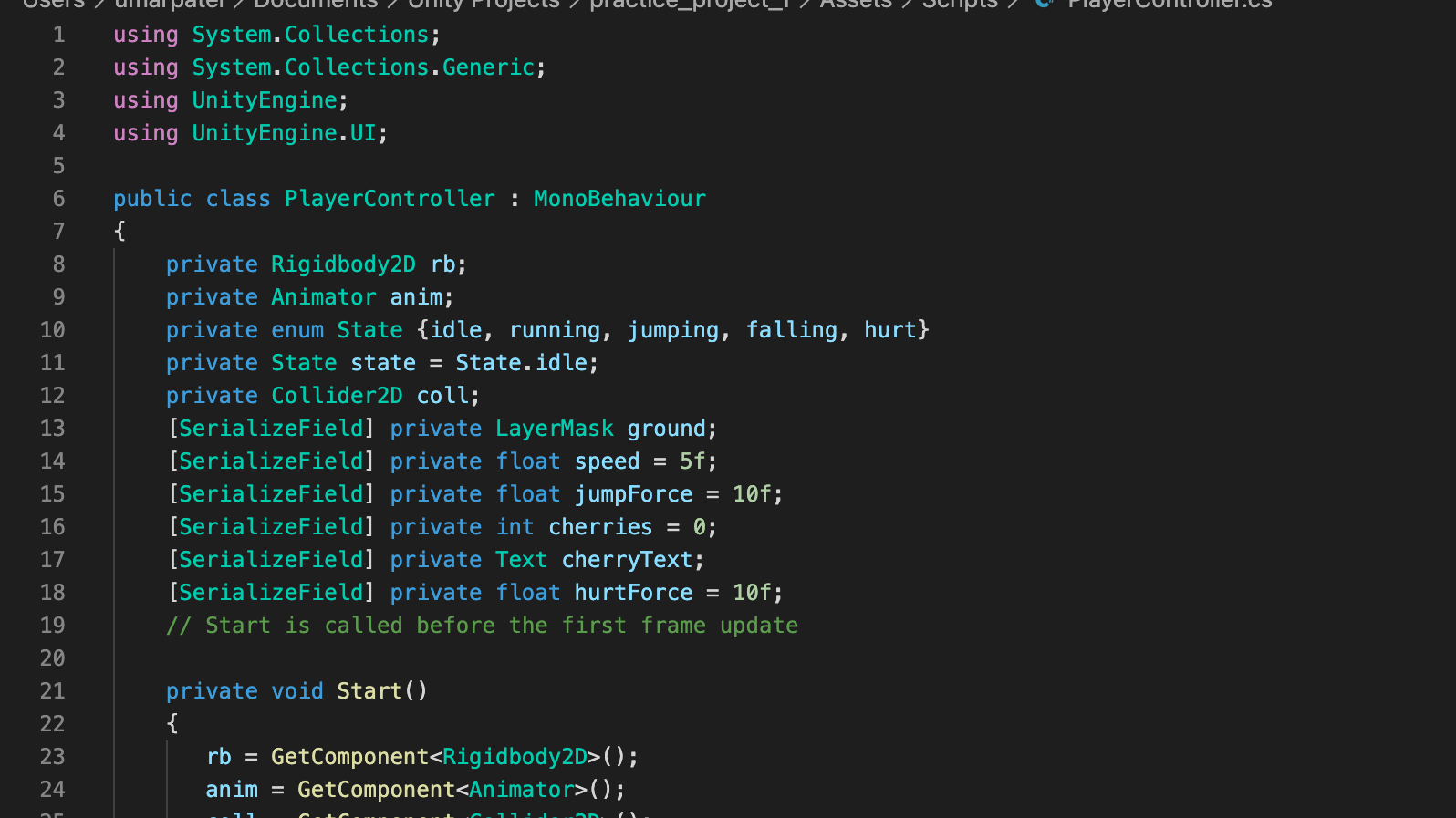

More on Swift Genie DSL

The developer creates an annotated class tailored to their application. The Swift Genie macro clearly explains the class and function declarations to GPT. The developer also supplies several few-shot examples for the model to learn from. Finally, the DSL interpreter converts the parsed and annotated command into the executable function call.
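The prompt that reaches GPT might be assembled roughly like this: function descriptions first, then the developer's few-shot examples, then the new annotated command. This sketch assumes plain-text prompts; `FunctionSpec`, `FewShotExample`, `buildPrompt`, and the `setColor` signature are hypothetical, with the descriptions written by hand where the framework would obtain them from its macro. No model is called here.

```swift
// Hypothetical description of one developer-exposed function.
struct FunctionSpec {
    let signature: String
    let summary: String
}

// One developer-supplied example mapping an annotated command to a call.
struct FewShotExample {
    let annotatedCommand: String
    let call: String
}

// Assemble function descriptions, few-shot examples, and the new command
// into a single prompt that ends where the model should write the call.
func buildPrompt(functions: [FunctionSpec], examples: [FewShotExample], command: String) -> String {
    var lines = ["You translate annotated commands into Swift calls.",
                 "Entity.current(n) is the entity tagged (n) in the command.",
                 "Available functions:"]
    lines += functions.map { "- \($0.signature): \($0.summary)" }
    lines.append("Examples:")
    for example in examples {
        lines.append("Command: \(example.annotatedCommand)")
        lines.append("Call: \(example.call)")
    }
    lines.append("Command: \(command)")
    lines.append("Call:")
    return lines.joined(separator: "\n")
}

let prompt = buildPrompt(
    functions: [FunctionSpec(signature: "setColor(_ entity: Entity, color: String)",
                             summary: "changes the entity's color")],
    examples: [FewShotExample(annotatedCommand: "Make this drum (0) blue",
                              call: "setColor(Entity.current(0), color: \"blue\")")],
    command: "Make this chair (0) red")
print(prompt)
```

Ending the prompt with an open "Call:" line nudges the model to answer with a single call that the interpreter can parse.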

Project Summary and Overview